Quick Overview of Kong AI Gateway: Kubernetes Deployment & LLM Integration

Kong offers multiple editions of its software:

- OSS

- Enterprise

- Konnect (SaaS dashboard for both OSS and Enterprise versions)

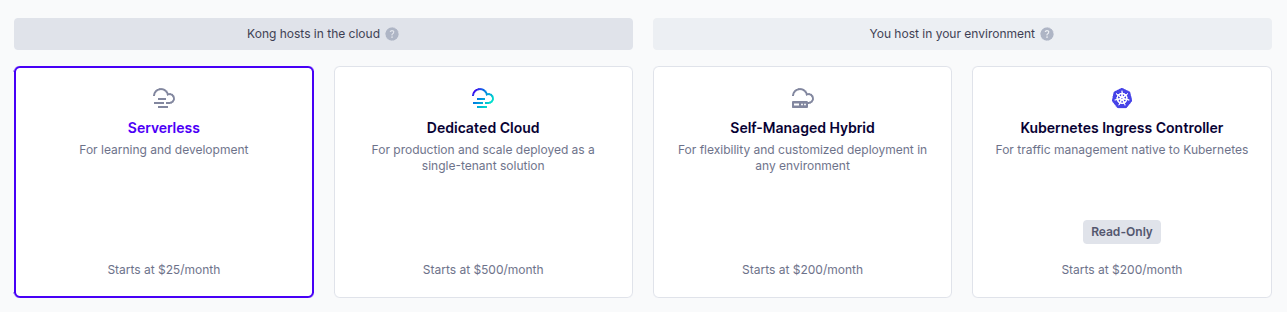

Konnect is a SaaS platform for managing and monitoring Kong Gateways. It provides a hosted control plane and supports two deployment models:

- Managed (Konnect runs both the control plane and the Kong Gateway)

- Self-hosted (hybrid mode: you deploy the gateway yourself, while Konnect manages the control plane)

How Kong Gateway can be run depends on where it is deployed.

Kubernetes deployment:

- Ingress gateway (Konnect read-only)

- Proxy (managed either by Konnect or by a self-hosted control plane)

Other deployments:

- Proxy (managed either by Konnect or by a self-hosted control plane)

The OSS edition has more than enough features to run a powerful gateway. What makes Kong so versatile is its plugin system, which offers plugins for almost every use case.

Plugins providing AI services:

- AI Proxy (OSS, Enterprise)

- AI Prompt Decorator (OSS, Enterprise)

- AI Prompt Guard (OSS, Enterprise)

- AI Prompt Template (OSS, Enterprise)

- AI RAG Injector (OSS, Enterprise)

- AI Rate Limiting Advanced (OSS, Enterprise)

- AI Request Transformer (OSS, Enterprise)

- AI Response Transformer (OSS, Enterprise)

- AI Proxy Advanced (Enterprise)

- AI Azure Content Safety (Enterprise)

- AI Sanitizer (Enterprise)

- AI Semantic Cache (Enterprise)

- AI Semantic Prompt Guard (Enterprise)

AI Proxy plugin

The AI Proxy plugin provides AI Gateway functionality in the OSS edition. The example below configures two LLM providers, OpenAI and Anthropic.

This example uses the Kong Helm chart https://github.com/Kong/charts/tree/main/charts/kong (version 2.48.0).

```yaml
dblessConfig:
  config: |
    _format_version: "3.0"
    services:
      - name: openai
        url: https://api.openai.com
        routes:
          - name: openai
            paths:
              - /openai
            methods:
              - POST
            plugins:
              - name: ai-proxy
                config:
                  route_type: "llm/v1/chat"
                  auth:
                    header_name: "Authorization"
                    header_value: "Bearer <key>"
                  model:
                    provider: openai
                    name: gpt-4
                    options:
                      max_tokens: 512
                      temperature: 1.0
      - name: anthropic-chat
        url: https://api.anthropic.com
        routes:
          - name: anthropic
            paths:
              - /anthropic
            methods:
              - POST
            plugins:
              - name: ai-proxy
                config:
                  route_type: "llm/v1/chat"
                  auth:
                    # Anthropic expects the raw API key here, not a bearer token
                    header_name: "x-api-key"
                    header_value: "<key>"
                  model:
                    provider: anthropic
                    name: claude-3-5-sonnet-latest
                    options:
                      max_tokens: 512
                      temperature: 1.0
                      # Quoted so YAML does not parse it as a date
                      anthropic_version: "2023-06-01"
```

Kong AI Gateway configuration
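With `route_type: "llm/v1/chat"`, clients send the same OpenAI-style chat body to either route, and AI Proxy converts it into the format the configured provider expects. The sketch below is our own simplified illustration of that kind of translation for Anthropic's Messages API. It is not Kong's implementation. The function names are hypothetical, and real translation also has to handle responses, streaming, and multi-part content.

```python
import json


def openai_chat_to_anthropic(body: dict, model: str, max_tokens: int,
                             temperature: float) -> dict:
    """Convert an OpenAI-style chat body into an Anthropic Messages API body.

    Hypothetical helper for illustration only, not Kong's code.
    """
    system_parts = []
    messages = []
    for msg in body["messages"]:
        if msg["role"] == "system":
            # Anthropic takes the system prompt as a top-level field,
            # not as an entry in the messages list.
            system_parts.append(msg["content"])
        else:
            messages.append({"role": msg["role"], "content": msg["content"]})
    payload = {
        "model": model,
        "max_tokens": max_tokens,  # required by the Anthropic Messages API
        "temperature": temperature,
        "messages": messages,
    }
    if system_parts:
        payload["system"] = "\n".join(system_parts)
    return payload


def anthropic_headers(api_key: str, anthropic_version: str) -> dict:
    # Corresponds to auth.header_name/header_value and
    # model.options.anthropic_version in the plugin config above.
    return {
        "x-api-key": api_key,
        "anthropic-version": anthropic_version,
        "content-type": "application/json",
    }


if __name__ == "__main__":
    client_body = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Name three kinds of pears."},
        ]
    }
    upstream = openai_chat_to_anthropic(
        client_body, "claude-3-5-sonnet-latest", 512, 1.0)
    print(json.dumps(upstream, indent=2))
    print(anthropic_headers("<key>", "2023-06-01"))
```

This is why a single client can target either route: only the gateway needs to know each provider's native request format.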

This configuration exposes two endpoints:

- example.com/openai

- example.com/anthropic

Here, example.com acts as a gateway to both OpenAI and Anthropic.
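The following minimal client sketch uses only the Python standard library. It assumes the gateway is reachable at `https://example.com`, with the scheme being our own assumption. The client sends no API key because the `ai-proxy` plugin injects the configured auth header. Both routes accept the same OpenAI-style body. The helper name `build_chat_request` is hypothetical.

```python
import json
import urllib.request

# Assumed gateway address; replace with your Kong proxy host.
GATEWAY = "https://example.com"


def build_chat_request(path: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for an AI Proxy route (llm/v1/chat format).

    No Authorization or x-api-key header is set: the ai-proxy plugin
    adds the provider credentials on the gateway side.
    """
    body = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=GATEWAY + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    for path in ("/openai", "/anthropic"):
        req = build_chat_request(path, "Tell me about pears.")
        # Uncomment to send the request through a running gateway:
        # with urllib.request.urlopen(req) as resp:
        #     print(json.load(resp)["choices"][0]["message"]["content"])
        print(req.get_method(), req.full_url)
```

Switching providers is just a matter of changing the path. The request body stays identical.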

Implementing prompt guard

Extending the OpenAI route from the example above with the AI Prompt Guard plugin:

```yaml
dblessConfig:
  config: |
    _format_version: "3.0"
    services:
      - name: openai
        url: https://api.openai.com
        routes:
          - name: openai
            paths:
              - /openai
            methods:
              - POST
            plugins:
              - name: ai-proxy
                config:
                  route_type: "llm/v1/chat"
                  auth:
                    header_name: "Authorization"
                    header_value: "Bearer <key>"
                  model:
                    provider: openai
                    name: gpt-4
                    options:
                      max_tokens: 512
                      temperature: 1.0
              - name: ai-prompt-guard
                config:
                  allow_all_conversation_history: true
                  allow_patterns:
                    - ".*(P|p)ears.*"
                    - ".*(P|p)eaches.*"
                  deny_patterns:
                    - ".*(A|a)pples.*"
                    - ".*(O|o)ranges.*"
```

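The AI Prompt Guard plugin checks requests passing through AI Proxy against lists of regular expressions. A request that matches any deny pattern is rejected with a 400 response. When allow patterns are set, a request must also match at least one of them to be forwarded to the LLM. Deny patterns therefore take precedence over allow patterns.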
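To make the allow/deny precedence concrete, here is a simplified local model of those rules using the patterns from the config above. It is our own sketch, not the plugin's code. It checks a single prompt string, whereas the real plugin inspects chat messages, and which messages it checks depends on settings such as `allow_all_conversation_history`. It also uses Python's `re` module, whose semantics may differ from the gateway's regex engine for complex patterns. The function `prompt_guard` is hypothetical.

```python
import re
from typing import List


def prompt_guard(prompt: str, allow: List[str], deny: List[str]) -> int:
    """Return the HTTP status a prompt would get: 400 if blocked,
    200 if it would be forwarded to the LLM (simplified model)."""
    # Deny takes precedence: any deny match blocks the request,
    # even if an allow pattern also matches.
    if any(re.search(p, prompt) for p in deny):
        return 400
    # If allow patterns are configured, at least one must match.
    if allow and not any(re.search(p, prompt) for p in allow):
        return 400
    return 200


ALLOW = [".*(P|p)ears.*", ".*(P|p)eaches.*"]
DENY = [".*(A|a)pples.*", ".*(O|o)ranges.*"]

if __name__ == "__main__":
    for prompt in ("Tell me about pears",
                   "Compare pears and apples",
                   "What about bananas?"):
        print(prompt, "->", prompt_guard(prompt, ALLOW, DENY))
```

With these patterns, "Tell me about pears" passes (200). "Compare pears and apples" is blocked (400) because the deny match wins. "What about bananas?" is also blocked (400) because it matches no allow pattern.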

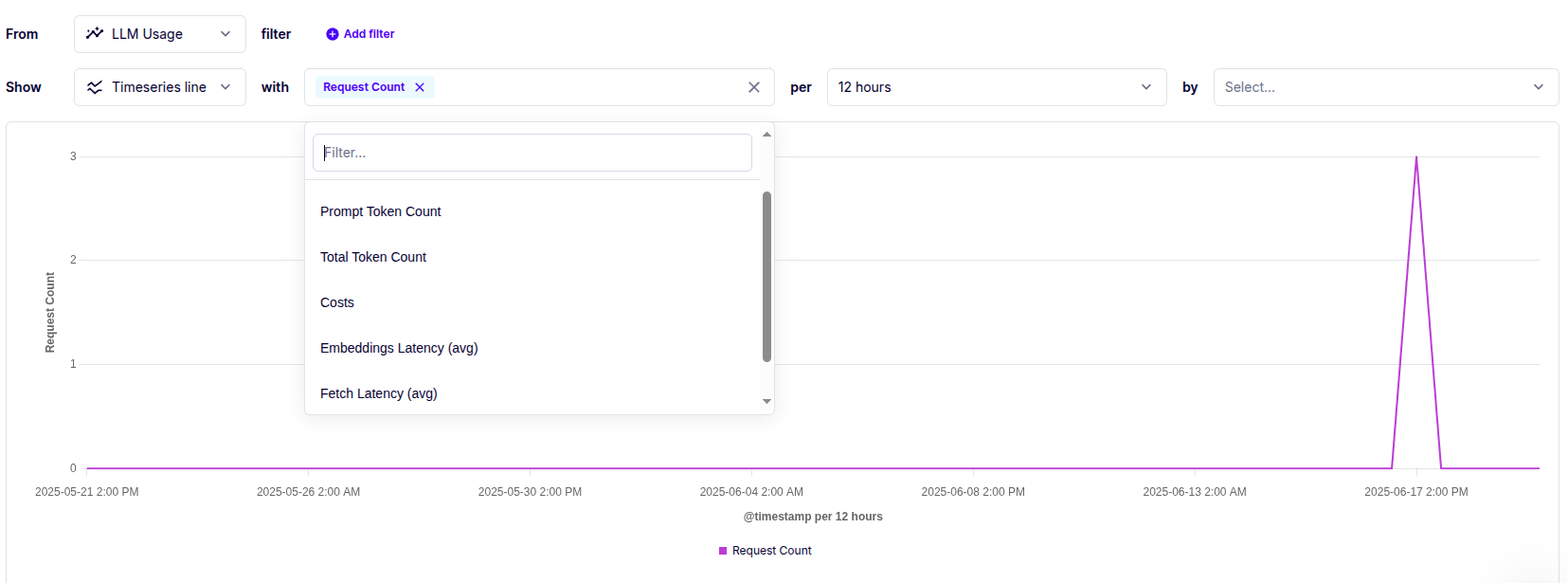

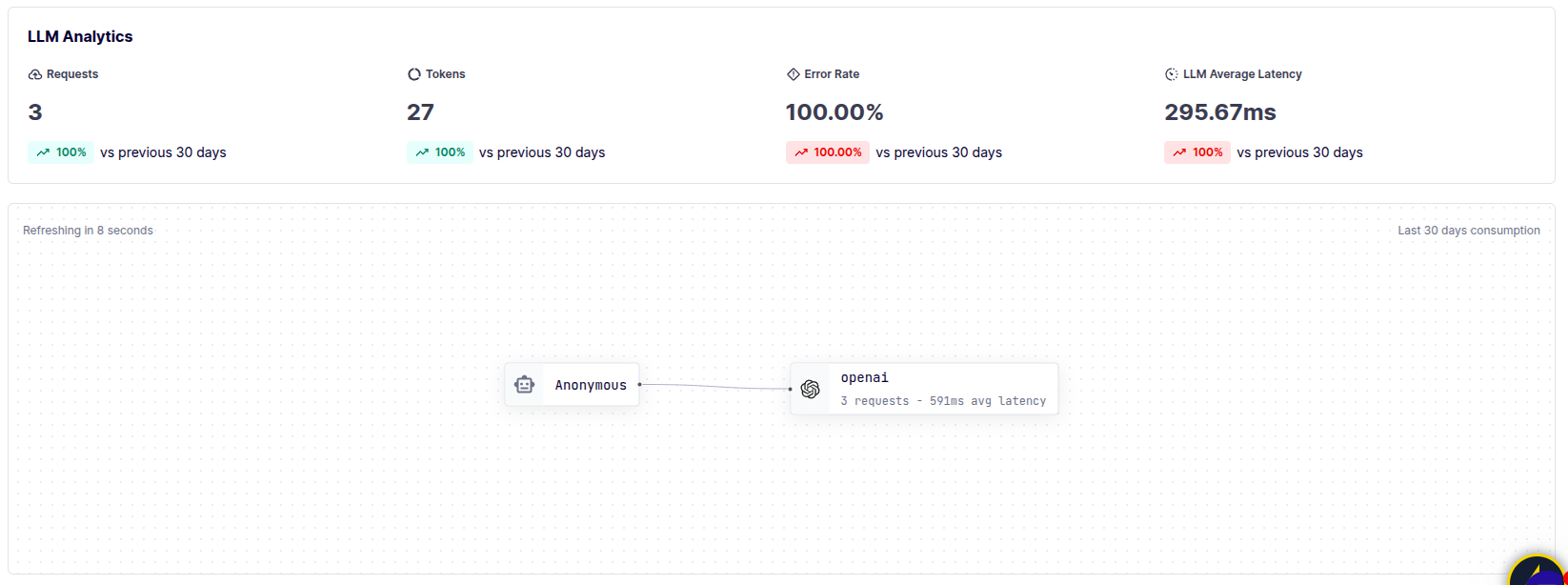

LLM metrics (Enterprise only)

Metrics are only available with the AI Proxy Advanced plugin, which is Enterprise only. They are still useful features, so here is a short overview.

Metrics available:

- Costs

- Latency

- Errors

A visualization of the upstream LLMs is also available, giving a quick overview of each one.
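A cost metric ultimately comes down to token counts multiplied by per-token prices. Those token counts are the kind reported in the `usage` field (`prompt_tokens`, `completion_tokens`) of an OpenAI-style response. The sketch below shows that arithmetic under two assumptions of our own: prices are expressed per one million tokens, and the prices used are hypothetical, not real provider pricing. It does not describe how Kong computes its cost metric.

```python
def request_cost(prompt_tokens: int, completion_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimate the cost of one LLM call from its token usage.

    Prices are assumed to be per 1,000,000 tokens.
    """
    return (prompt_tokens * input_price_per_m
            + completion_tokens * output_price_per_m) / 1_000_000


if __name__ == "__main__":
    # Hypothetical prices: 30.0 per 1M input tokens, 60.0 per 1M output tokens.
    print(f"{request_cost(1_000, 500, 30.0, 60.0):.4f}")  # 0.0600
```

Summing this value over all requests per model or route gives the kind of aggregate a cost dashboard displays.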

Interested in other AI Gateways? Read more about the Traefik AI gateway, what it offers, and how to deploy it.