Containerization explained

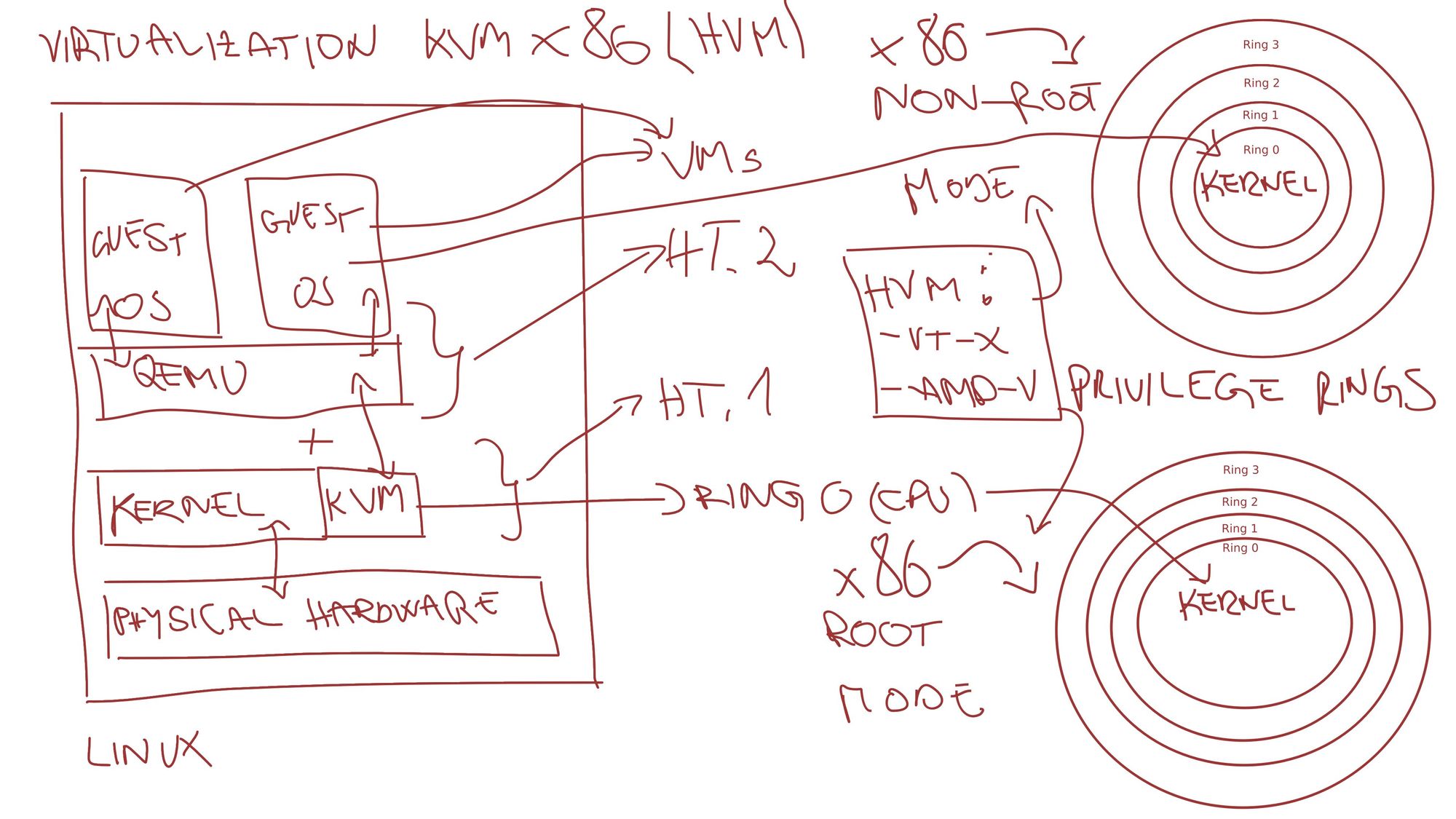

In the first part, we introduced virtualization on Linux and the general concepts behind virtualization.

Containerization

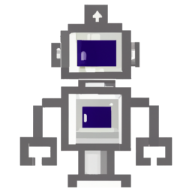

Let's start with an overview of the differences between virtualization and containerization.

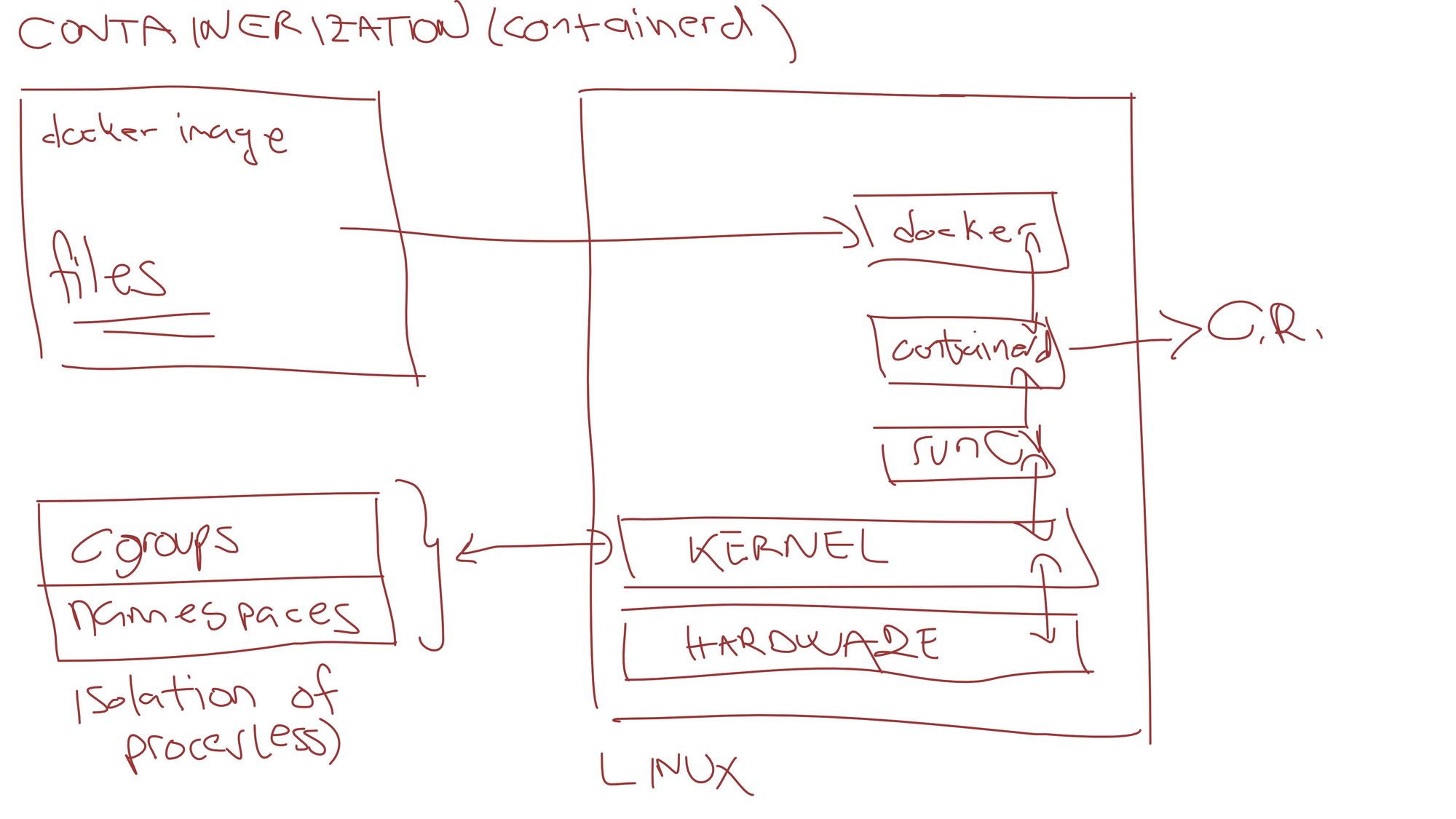

As the diagram above shows, containers handle isolation differently from virtual machines. But first, let's dig into the container itself.

A container is a running instance of a container image. It runs on the host operating system with a restricted and isolated set of resources, which makes the container behave as if it had resources of its own.

A container image is a collection of files and metadata. When a container starts, the image's files are mounted as its file system.

In other words, the container image defines what the container's file system contains.

Containers talk directly to the host kernel, and this is the main difference between a container and a virtual machine, which runs its own guest kernel on virtualized hardware.

Another difference is that there is no hypervisor. Instead, a container runtime engine is used to spawn the containers.

In summary, the processes in a container talk directly to the host kernel, but only over an isolated and restricted set of resources.

```
$ docker run alpine sleep 3600
```

Terminal from the host starting Alpine container

```
$ ps aux
qdnqn 35545 0.0 0.1 1569740 48908 pts/8 Sl+ 17:02 0:00 docker run alpine sleep 3600
root 35621 0.0 0.0 710900 8060 ? Sl 17:02 0:00 /usr/bin/containerd-shim-runc-v2 -namespace moby -id ...
root 35640 0.0 0.0 1588 4 ? Ss 17:02 0:00 sleep 3600
... [ALL OTHER PROCESSES ON THE HOST]
```

Terminal from the host listing active processes

```
/ # ps aux
PID   USER     TIME  COMMAND
    1 root      0:00 sleep 3600
   13 root      0:00 /bin/sh
   19 root      0:00 ps aux
```

Terminal from the container listing active processes

As we can see, the process from the container is an ordinary process running on the host kernel: sleep 3600 appears as PID 35640 on the host and as PID 1 inside the container. It communicates directly with the host kernel.

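We can therefore send signals from the host directly to this process, using its host PID 35640. One caveat: because sleep is PID 1 in its own PID namespace, the kernel drops signals for which it has not installed a handler, so a plain SIGTERM is ignored, while a SIGKILL sent from the host is always delivered.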
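The mapping between the two PIDs can also be read from procfs. On Linux 4.1 and later, /proc/<pid>/status contains an NSpid line that lists the process's PID in each PID namespace it belongs to, outermost first (when read on the host, the first value is the host PID). The minimal sketch below parses that line; the sample status text is a hypothetical excerpt shaped after the ps output above, not output captured from a real system.

```python
from pathlib import Path


def parse_nspid(status_text: str) -> list[int]:
    """Return the PIDs listed on the NSpid line, outermost namespace first."""
    for line in status_text.splitlines():
        if line.startswith("NSpid:"):
            # The values after the key are whitespace-separated PIDs.
            return [int(field) for field in line.split()[1:]]
    raise ValueError("no NSpid line found (kernel older than 4.1?)")


def namespace_pids(pid: int) -> list[int]:
    """Read /proc/<pid>/status and return the process's PID in every PID namespace."""
    return parse_nspid(Path(f"/proc/{pid}/status").read_text())


# Hypothetical excerpt of /proc/35640/status for the sleep process shown above.
sample = "Name:\tsleep\nPid:\t35640\nNSpid:\t35640\t1\n"
host_pid, container_pid = parse_nspid(sample)
print(host_pid, container_pid)  # 35640 1
```

Two values on the NSpid line are a quick sign that a process lives in a nested PID namespace, as container processes do.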

Containers are built on two features of the Linux kernel: cgroups and namespaces.

Cgroups (control groups) restrict how much of the host's resources, such as CPU time, memory, and I/O, a group of processes may use.
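As an example of such a limit: with cgroup v2, a group's CPU limit is stored in its cpu.max file as "<quota> <period>" in microseconds, or as "max <period>" when there is no limit. Docker documents --cpus="1.5" as equivalent to a period of 100000 and a quota of 150000. The sketch below converts a cpu.max value into a number of CPUs; the cgroup directory in the comment is a hypothetical example and differs between systems.

```python
from pathlib import Path
from typing import Optional


def cpu_limit(cpu_max: str) -> Optional[float]:
    """Convert a cgroup v2 cpu.max value into a CPU count (None means unlimited)."""
    quota, period = cpu_max.split()
    if quota == "max":
        return None
    # The group may run for `quota` microseconds in every `period` microseconds.
    return int(quota) / int(period)


def read_cpu_limit(cgroup_dir: str) -> Optional[float]:
    # Hypothetical example: /sys/fs/cgroup/system.slice/docker-<id>.scope
    return cpu_limit(Path(cgroup_dir, "cpu.max").read_text())


print(cpu_limit("150000 100000"))  # 1.5
print(cpu_limit("max 100000"))     # None
```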

Namespaces change what a group of processes can see: its own view of process IDs, mounted file systems, users, network interfaces, and so on.

Combining cgroups and namespaces gives you a container: a group of processes that is both restricted (by cgroups) and isolated (by namespaces).

This way, containers can't see each other's processes, and they are also isolated from the host's processes. Only the processes in the container's own PID namespace are visible inside the container.
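Namespace membership is visible in procfs as well. Each entry in /proc/<pid>/ns is a symbolic link whose target looks like pid:[4026531836]; two processes share a namespace exactly when those inode numbers match. The minimal sketch below parses the link targets and compares two processes. It is Linux-only, and inspecting another user's process (such as the container's PID 35640) typically requires root.

```python
import os
import re

# A namespace link target has the form "<type>:[<inode>]".
NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a link target such as 'pid:[4026531836]' into (type, inode)."""
    match = NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def namespaces(pid: str = "self") -> dict[str, int]:
    """Map each entry of /proc/<pid>/ns to its namespace inode number."""
    ns_dir = f"/proc/{pid}/ns"
    return {
        name: parse_ns_link(os.readlink(os.path.join(ns_dir, name)))[1]
        for name in os.listdir(ns_dir)
    }


def shared_namespaces(pid_a: str, pid_b: str) -> set[str]:
    """Return the namespace entries that two processes have in common."""
    a, b = namespaces(pid_a), namespaces(pid_b)
    return {name for name in a if a[name] == b.get(name)}
```

For example, shared_namespaces("self", "35640") run as root on the host would be expected to leave out pid, net, and mnt, since Docker gives the container its own namespaces of those types.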

Let's analyze the diagram below, which shows how a container is started using the containerd runtime engine.

Container runtime engines typically rely on runc, a low-level runtime, to create and run containers on Linux.

There are multiple container runtime engines, for example:

- containerd

- CRI-O
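runc does not work with images directly: it runs an OCI bundle, which is a root file system plus a config.json file (runc spec generates a default one). The sketch below builds a heavily trimmed configuration as a Python dict to show where the namespaces and cgroup limits discussed above end up. It is illustrative only: the field values are assumptions, and a real config.json contains many more settings (mounts, capabilities, and so on).

```python
import json


def minimal_oci_config(args: list[str], memory_limit: int, cpus: float) -> dict:
    """Build a trimmed, illustrative OCI runtime config (not a complete spec)."""
    period = 100_000  # microseconds; Docker's default CPU period
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": args,
            "cwd": "/",
        },
        # The container's file system: the unpacked image layers.
        "root": {"path": "rootfs", "readonly": True},
        "hostname": "demo",
        "linux": {
            # Namespaces decide what the container can see.
            "namespaces": [{"type": t} for t in ("pid", "network", "ipc", "uts", "mount")],
            # cgroup resources decide how much the container may use.
            "resources": {
                "memory": {"limit": memory_limit},
                "cpu": {"quota": int(cpus * period), "period": period},
            },
        },
    }


config = minimal_oci_config(["sleep", "3600"], memory_limit=256 * 1024 * 1024, cpus=1.5)
print(json.dumps(config["linux"]["resources"]["cpu"]))  # {"quota": 150000, "period": 100000}
```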

Everything discussed here concerns Linux containers, since that is where Docker-style containers originated. Windows containers, however, exist as well.

Linux vs Windows containers

Containers rely on the kernel of the host operating system to execute their processes, so the binaries inside a container must be compatible with the kernel running on the host.

As a result, Windows containers can't run natively on a Linux kernel, and vice versa.
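One way to see the incompatibility is to look at the executables themselves. Linux binaries use the ELF format, whose files start with the bytes 0x7F 'E' 'L' 'F', while Windows executables such as cmd.exe use the PE format, whose files start with 'MZ'. The Linux kernel does not natively load PE files. A minimal sketch that tells the two apart by their leading bytes:

```python
def executable_format(header: bytes) -> str:
    """Classify an executable by its leading "magic" bytes."""
    if header.startswith(b"\x7fELF"):
        return "ELF"  # Linux (and most other Unix-like systems)
    if header.startswith(b"MZ"):
        return "PE"   # Windows executables such as cmd.exe
    return "unknown"


def file_format(path: str) -> str:
    """Read the first four bytes of a file and classify it."""
    with open(path, "rb") as f:
        return executable_format(f.read(4))


print(executable_format(b"\x7fELF\x02\x01\x01"))  # ELF
print(executable_format(b"MZ\x90\x00"))           # PE
```

On a Linux host, file_format("/bin/sh") would be expected to return "ELF".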

When you run Docker Desktop on Windows, what usually happens is that it runs a Linux virtual machine in the background, which is why you can run Linux containers.

In simpler words:

If you take a Windows-based container image that contains, for example, cmd.exe, which is compiled for Windows, and run that container on a Linux system, it won't work.

If, on the other hand, software that originated on Windows is compiled for Linux, as with the Linux-based ASP.NET Core images, you can run a container hosting your ASP.NET Core app on Linux.

```
➜ ~ docker run -it mcr.microsoft.com/windows/nanoserver:ltsc2022 cmd.exe
Unable to find image 'mcr.microsoft.com/windows/nanoserver:ltsc2022' locally
ltsc2022: Pulling from windows/nanoserver
docker: no matching manifest for linux/amd64 in the manifest list entries.
```

Running Windows container on Linux.

It doesn't work, which makes sense given everything said above: the image is published only for Windows, so its manifest list has no entry for linux/amd64.
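The error comes from the image's manifest list (an OCI image index): it holds one entry per supported platform, each with a platform object such as {"os": "windows", "architecture": "amd64"}, and Docker pulls the entry matching the host. The sketch below mimics that selection on a hypothetical, heavily shortened index with a placeholder digest; real matching also considers fields such as the CPU variant and os.version.

```python
from typing import Optional


def select_manifest(index: dict, os_name: str, arch: str) -> Optional[str]:
    """Return the digest of the first manifest built for os_name/arch, if any."""
    for entry in index.get("manifests", []):
        platform = entry.get("platform", {})
        if platform.get("os") == os_name and platform.get("architecture") == arch:
            return entry["digest"]
    return None


# Hypothetical index for a Windows-only image (placeholder digest).
nanoserver_index = {
    "manifests": [
        {"digest": "sha256:aaaa", "platform": {"os": "windows", "architecture": "amd64"}},
    ]
}

if select_manifest(nanoserver_index, "linux", "amd64") is None:
    print("no matching manifest for linux/amd64 in the manifest list entries")
```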

Docker

Docker built a set of tools on top of the kernel features described above to make containers easy for end users to work with.

Thanks to its huge adoption, Docker is largely responsible for the popularity of containers.

This article focuses on Docker running on Linux. Other available tools and technologies include:

- Podman

- LXD

- Buildah

- rkt

- Docker Desktop

- etc.

We need to introduce a few terms:

- Docker daemon

- Docker image

- Docker container runtime

The Docker daemon (dockerd) does the heavy lifting: it manages containers and exposes an API through which clients talk to it.

For example, running docker ps in the Docker CLI sends a request to the Docker daemon asking for the list of running containers.

Usually the Docker daemon and the CLI run on the same host, but the daemon can also be on a remote host.
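The CLI and the daemon communicate over an HTTP API. On Linux the daemon listens by default on the Unix socket /var/run/docker.sock, and docker ps corresponds to a GET /containers/json request. The sketch below sends that request using only the Python standard library; it assumes the default socket path and permission to use it (root, or membership in the docker group).

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def list_running_containers(socket_path: str = "/var/run/docker.sock") -> list:
    """Ask the Docker daemon for its running containers, like docker ps does."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/containers/json")
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"daemon returned HTTP {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

Each returned item is a dict whose Id, Image, and Names fields correspond to columns of the docker ps output.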

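A Docker image bundles the container's file system, stored as a stack of read-only layers, with metadata such as the default command and environment variables. Images are usually built from a Dockerfile, a text file that describes the build steps.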

On its own, an image does nothing; when it is handed to a container runtime engine, the engine starts a container from it.

Filesystem of the container

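The container file system is based on a union file system. On current Docker installations this is usually OverlayFS, used through the overlay2 storage driver.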

It allows files and directories of separate file systems, known as branches, to be transparently overlaid, forming a single coherent file system. Contents of directories which have the same path within the merged branches will be seen together in a single merged directory, within the new, virtual filesystem.

https://en.wikipedia.org/wiki/UnionFS

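How do we get this layered file system? The Docker image is the file system, stored as a stack of layers. Each Dockerfile instruction that modifies files (RUN, COPY, and ADD) adds a new layer; other instructions, such as ENV or CMD, only change the image's metadata.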
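Only the RUN, COPY, and ADD instructions add file-system layers; other instructions only change the image's metadata. The sketch below applies that rule to count the layers a Dockerfile adds on top of its base image. It assumes one instruction per line (no backslash continuations, heredocs, or parser directives), so it is a simplification rather than a real Dockerfile parser, and the Dockerfile content is hypothetical.

```python
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def count_new_layers(dockerfile: str) -> int:
    """Count instructions that add file-system layers (base image layers excluded)."""
    count = 0
    for line in dockerfile.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Dockerfile instructions are case-insensitive.
        instruction = line.split(maxsplit=1)[0].upper()
        if instruction in LAYER_INSTRUCTIONS:
            count += 1
    return count


example = """\
FROM alpine:3.19
ENV APP_HOME=/app
COPY app.sh /app/app.sh
RUN chmod +x /app/app.sh
CMD ["/app/app.sh"]
"""
print(count_new_layers(example))  # 2
```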

When a Docker container starts, all of its image layers are read-only. On top of them, a thin read-write layer is added, which allows the container to create, modify, and delete files. Modifying a file that lives in an image layer first copies it up into this writable layer (copy-on-write).
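To make the layering concrete, here is a toy, in-memory model of a union file system: read-only image layers stacked under one writable container layer. Reads search from the top layer down, writes always land in the writable layer (copy-on-write), and deleting a file records a "whiteout" marker in the writable layer instead of touching the image. This is a conceptual sketch with hypothetical file contents, not how OverlayFS is actually implemented.

```python
from typing import Optional

WHITEOUT = object()  # marks a file as deleted in the writable layer


class UnionFS:
    def __init__(self, image_layers: list[dict[str, str]]):
        # image_layers[0] is the base layer; later layers sit on top of it.
        self.read_only = [dict(layer) for layer in image_layers]
        self.writable: dict[str, object] = {}

    def _layers_top_down(self):
        yield self.writable
        yield from reversed(self.read_only)

    def read(self, path: str) -> Optional[str]:
        for layer in self._layers_top_down():
            if path in layer:
                value = layer[path]
                return None if value is WHITEOUT else value
        return None

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: the image layers are never modified.
        self.writable[path] = content

    def delete(self, path: str) -> None:
        self.writable[path] = WHITEOUT

    def listing(self) -> list[str]:
        merged: dict[str, object] = {}
        for layer in [*self.read_only, self.writable]:  # bottom to top
            merged.update(layer)
        return sorted(p for p, v in merged.items() if v is not WHITEOUT)


base = {"/etc/os-release": "Alpine", "/bin/sh": "busybox"}
app = {"/app/app.sh": "echo v1"}
fs = UnionFS([base, app])
fs.write("/app/app.sh", "echo v2")  # shadows the image's copy
fs.delete("/bin/sh")                # whiteout hides the base-layer file
print(fs.read("/app/app.sh"))       # echo v2
print(fs.listing())                 # ['/app/app.sh', '/etc/os-release']

fresh = UnionFS([base, app])        # a new container: empty writable layer
print(fresh.read("/app/app.sh"))    # echo v1
```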

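This writable layer belongs to that specific container. It survives docker stop and docker start, but it is deleted when the container is removed, for example with docker rm, or automatically with docker run --rm. Each new docker run creates a new container with an empty writable layer, so it starts from a clean slate; nothing written to that layer outlives the container.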

Persistent data is therefore kept outside the writable layer: containers can mount Docker volumes (for example, ones created with docker volume create) or bind-mount directories shared from the host.

Networking

The last topic of this article is a brief look at networking. Since containers rely on the host operating system in every other respect, networking is no different. By default, unless another network is specified explicitly, Docker connects containers to the docker0 interface, which the daemon creates when it starts.

This interface is a simple Linux bridge that acts similarly to an L2 switch.

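Each container sees only the network interfaces in its own network namespace. On the default bridge network, Docker creates a virtual Ethernet (veth) pair for each container: one end appears inside the container as eth0, and the other end stays on the host, attached to docker0.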

```
/ # ifconfig
eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:02
          inet addr:172.17.0.2  Bcast:172.17.255.255  Mask:255.255.0.0
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:25 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:3676 (3.5 KiB)  TX bytes:0 (0.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)
```

Running ifconfig from the inside of the container

Only two interfaces are listed in the container:

- eth0 (Ethernet)

- lo (loopback)
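The same per-namespace view is available without ifconfig: /proc/net/dev lists the interfaces of the reading process's network namespace, so inside the container it shows only lo and eth0. The sketch below parses that file into received and transmitted byte counts; the sample text is a hypothetical excerpt whose counters mirror the ifconfig output above.

```python
from pathlib import Path


def parse_net_dev(text: str) -> dict[str, tuple[int, int]]:
    """Map interface name -> (received bytes, transmitted bytes)."""
    interfaces = {}
    for line in text.splitlines()[2:]:  # the first two lines are headers
        if ":" not in line:
            continue
        name, counters = line.split(":", 1)
        fields = counters.split()
        # Field 0 is received bytes; field 8 is transmitted bytes.
        interfaces[name.strip()] = (int(fields[0]), int(fields[8]))
    return interfaces


def current_namespace_interfaces() -> dict[str, tuple[int, int]]:
    # /proc/net/dev reflects the network namespace of the reading process.
    return parse_net_dev(Path("/proc/net/dev").read_text())


sample = (
    "Inter-|   Receive                                                |  Transmit\n"
    " face |bytes    packets errs drop fifo frame compressed multicast|bytes    packets errs drop fifo colls carrier compressed\n"
    "    lo:       0       0    0    0    0     0          0         0        0       0    0    0    0     0       0          0\n"
    "  eth0:    3676      25    0    0    0     0          0         0        0       0    0    0    0     0       0          0\n"
)
print(parse_net_dev(sample))  # {'lo': (0, 0), 'eth0': (3676, 0)}
```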

On the host, however, the docker0 bridge is visible:

```
docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.17.0.1  netmask 255.255.0.0  broadcast 172.17.255.255
        inet6 fe80::42:efff:fe7c:afae  prefixlen 64  scopeid 0x20<link>
        ether 02:42:ef:7c:af:ae  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 20  bytes 2975 (2.9 KB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
```

Running ifconfig from the host operating system

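Containers on the default bridge network get their IP addresses from the 172.17.0.0/16 pool: here the container has 172.17.0.2, while docker0 on the host has 172.17.0.1 and acts as the containers' gateway. Hence all containers on the same network can communicate with each other, and with other entities, through docker0. The lo interface (127.0.0.1) only reaches the container itself, just as loopback on the host only reaches the host.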
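Python's standard ipaddress module can confirm this addressing, using the values from the ifconfig output above:

```python
import ipaddress

bridge = ipaddress.ip_network("172.17.0.0/16")
gateway = ipaddress.ip_address("172.17.0.1")    # docker0 on the host
container = ipaddress.ip_address("172.17.0.2")  # eth0 inside the container

print(container in bridge, gateway in bridge)         # True True
print(bridge.netmask)                                 # 255.255.0.0
print(bridge.broadcast_address)                       # 172.17.255.255
print(bridge.num_addresses - 2)                       # 65534
print(ipaddress.ip_address("127.0.0.1").is_loopback)  # True
```

The 65534 usable addresses exclude the network and broadcast addresses, and one of them is taken by docker0 itself.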

Conclusion

Containers are quick to spin up (faster than virtual machines), easy to define, and easy to manage. The reader's natural next step is Kubernetes. Once you understand:

- Virtual machines

- Containers

Navigating the world of Kubernetes becomes much easier, since the basics stay the same.

This concludes the series on virtualization and containerization.

If you are more interested in virtualization, check out the previous part.