Virtualization explained

What is virtualization?

Peeling back the layers of virtualization builds the foundation for understanding containerization.

First, some operating system fundamentals, based on Unix-like systems.

A program (process) running on the operating system executes in one of two spaces:

- User space

- Kernel space

A program running in user space does not have direct access to the underlying hardware.

To access those resources, a user-space process invokes a system call.

A system call switches execution from user space into kernel space, where the kernel accesses the resources on the process's behalf. At the hardware level, the difference between user space and kernel space comes down to which CPU instructions (and memory regions) the process is allowed to use.

The manager that makes sure everyone behaves is the kernel, the heart of the operating system. The kernel checks that the process has a sufficient level of access for the requested resource and, if everything is in order, grants it.

A typical example of a system call is reading from or writing to a file on the filesystem.
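To make this concrete, here is a minimal Python sketch (the function name is hypothetical). `os.open`, `os.write` and `os.read` are thin wrappers around the corresponding system calls, so each call crosses from user space into the kernel and back. On Linux, running such a script under `strace` shows the kernel-side calls (e.g. `openat`, `write`, `read`).

```python
import os
import tempfile

def write_then_read(data: bytes) -> bytes:
    """Write bytes to a file and read them back using syscall-level wrappers."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "syscall-demo.txt")  # hypothetical scratch file

        # open: the kernel checks permissions and hands back a file descriptor.
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
        try:
            os.write(fd, data)  # write: the kernel copies our buffer into the file
        finally:
            os.close(fd)  # close: release the descriptor

        fd = os.open(path, os.O_RDONLY)
        try:
            return os.read(fd, len(data))  # read: the kernel copies file data back
        finally:
            os.close(fd)
```

For example, `write_then_read(b"hello kernel")` returns `b"hello kernel"`; the program never touches the disk itself, it only asks the kernel to do so.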

The kernel handles many things, including:

- Process resource sharing (CPU, Memory, etc.)

- Talking directly to the hardware

- Resource isolation

- Orchestration

In short, a program interacts with the hardware only through the kernel, via system calls.

When a process starts, it gets its own set of resources, and the kernel guarantees that.

Other processes cannot access a process's resources unless this is explicitly configured (for example, through shared memory).
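A small sketch of this isolation, assuming a Unix-like system such as Linux (it relies on `os.fork`). After the fork, parent and child have separate address spaces, so the child's change to a variable is invisible to the parent; the child can only report its value through a pipe, an explicitly configured channel. The function name is hypothetical.

```python
import os
from typing import Tuple

def child_write_is_invisible_to_parent() -> Tuple[int, int]:
    """Return (value the child ends up with, value the parent still sees)."""
    counter = 0  # lives in this process's private memory
    read_fd, write_fd = os.pipe()  # explicit channel between the two processes
    pid = os.fork()  # the child gets its own copy of the address space
    if pid == 0:  # child process
        os.close(read_fd)
        counter = 42  # changes only the child's copy
        os.write(write_fd, str(counter).encode())
        os._exit(0)  # exit immediately, skipping the parent's remaining code
    os.close(write_fd)  # parent process from here on
    child_value = int(os.read(read_fd, 16).decode())
    os.close(read_fd)
    os.waitpid(pid, 0)  # reap the child
    return child_value, counter
```

Calling `child_write_is_invisible_to_parent()` returns `(42, 0)`: the child saw its own update, while the parent's `counter` was never touched.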

Now, on to virtualization.

Virtualization explained

Virtualization allows the hardware to be shared between virtual machines, each running its own operating system.

Resources that can be virtualized include:

- CPU

- Memory

- Storage

- Network

To virtualize the hardware, some kind of manager is needed. In virtualization, this manager is called a hypervisor. It handles resource sharing, access, and orchestration between the virtual machines.

There are two types of hypervisors:

- Type 1 - runs directly on the hardware (bare-metal, or hardware, hypervisors)

- Type 2 - runs on top of another operating system (software, or hosted, hypervisors)

Hardware (type 1) hypervisors run on the hardware itself, with no host operating system underneath, and let the user divide that hardware between virtual machines.

VMware ESXi is an example of a bare-metal (type 1) hypervisor.

Software (type 2) hypervisors run on top of an existing operating system.

VirtualBox is an example of a type 2 hypervisor.

The main difference between type 1 and type 2 hypervisors is that type 2 adds more latency, since there is an additional layer, the host operating system, between the hypervisor and the hardware.

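Two key terms in virtualization are host and guest. The host is the side running the hypervisor (the physical machine and the hypervisor software), and the guest is the operating system running inside a virtual machine.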

Type 2 hypervisors use software virtualization: they translate the guest OS's privileged operations (the ones that would normally need direct hardware access) and then execute them through the host kernel.

Modern CPUs offer hardware virtualization assistance. The popular implementations are:

- VT-x from Intel

- AMD-V from AMD

Hardware virtualization assistance lets the CPU execute the guest OS's privileged instructions directly, without translation. We will look at this in more detail later.
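On Linux, an x86 CPU advertises these extensions as flags in `/proc/cpuinfo`: `vmx` for Intel VT-x and `svm` for AMD-V. A minimal Python sketch that checks for them (the function name is hypothetical; the parser takes the file's text so it can be tried on any sample):

```python
from pathlib import Path
from typing import Optional

def hw_virt_extension(cpuinfo_text: str) -> Optional[str]:
    """Return 'VT-x', 'AMD-V', or None based on the CPU flags in cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        # x86 kernels list CPU features on lines like "flags : fpu vme ... vmx ..."
        if line.startswith("flags"):
            flags = set(line.partition(":")[2].split())
            if "vmx" in flags:
                return "VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None

if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print(hw_virt_extension(cpuinfo.read_text()))
```

If this prints `None` on a Linux x86 machine, the CPU (or the virtual CPU, when running inside a VM) does not expose hardware-assisted virtualization.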

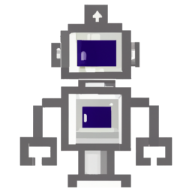

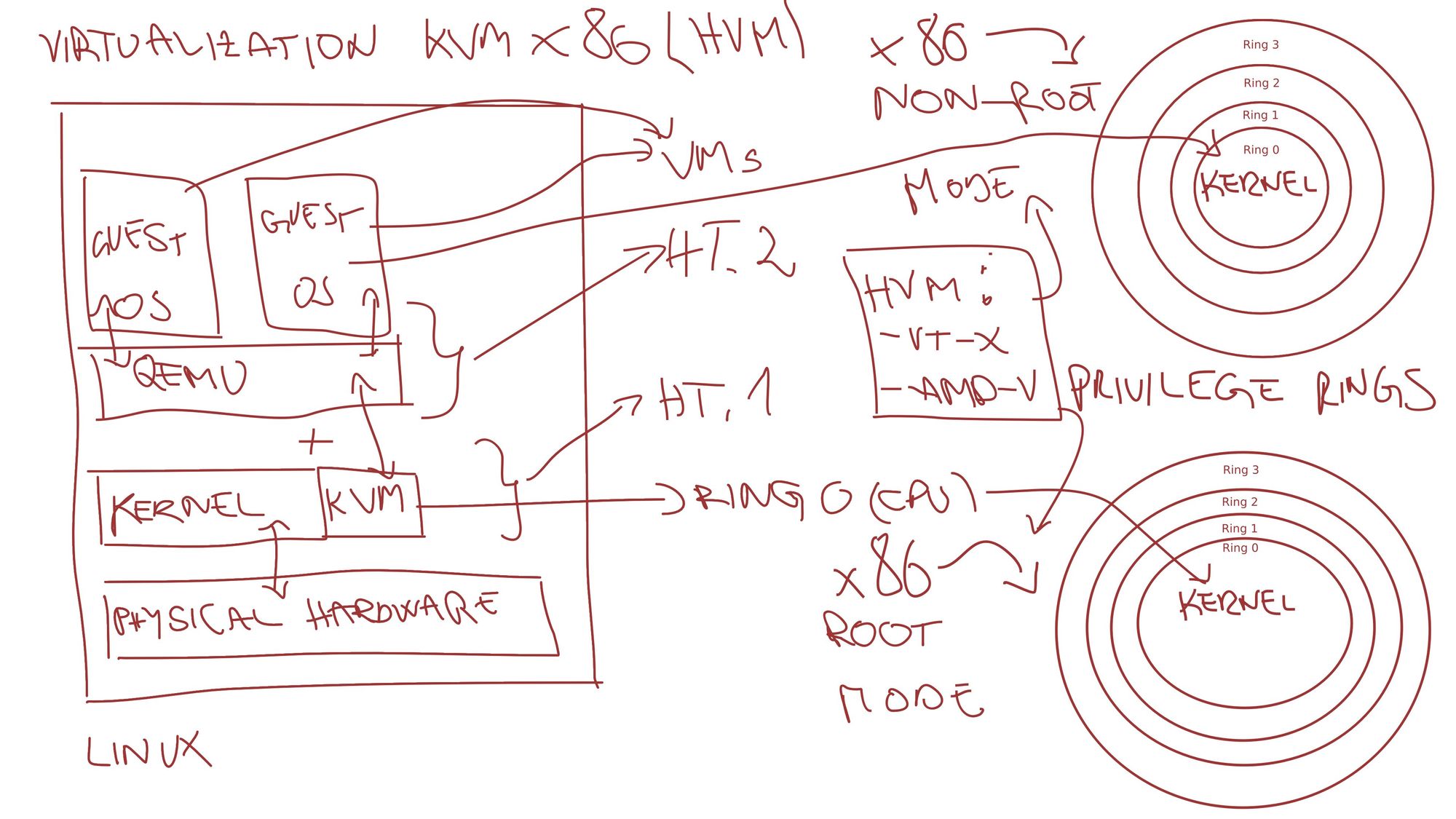

KVM and QEMU on the x86/x86-64 (Hardware-assisted)

Let's analyze the usual virtualization setup on Linux machines, KVM and QEMU on the x86/x86-64 CPU family, and see where it fits in the virtualization concepts above.

A privilege level in the x86 instruction set controls the access of the program currently running on the processor to resources such as memory regions, I/O ports, and special instructions.

https://en.wikipedia.org/wiki/Protection_ring

The quote from Wikipedia explains how the CPU is protected from processes running in user space. User-space processes usually run in the outermost, least privileged ring (Ring 3 on x86), so not all CPU instructions are available to them. The kernel runs in Ring 0, where every CPU instruction is allowed.

Initially, type 2 hypervisors relied on software translation before executing guest operations on the host kernel. This was expensive for processes that make many system calls.

Later, Intel and AMD introduced hardware-assisted virtualization technologies (VT-x, AMD-V). Not all CPUs support them.

Hardware-assisted virtualization allows the CPU to operate in two modes:

- Root mode

- Non-root mode

The host kernel runs in root mode, where it can execute all CPU instructions.

Guest code runs in non-root mode. When the guest OS switches into its own kernel mode, the CPU keeps it in non-root mode: the guest believes it is running in Ring 0, but sensitive operations trigger an exit back to the host in root mode (a VM exit), so the host OS stays protected.

Let's analyze the concepts used for virtualization on Linux machines.

There are several components to break down:

- Multiple guest OSes

- QEMU

- Kernel + KVM

- Hardware

- Privilege rings (Already explained)

Guest OSes are isolated operating systems, each of which believes it is the only operating system running on the hardware.

Qemu - A generic and open-source machine emulator and virtualizer.

QEMU’s system emulation provides a virtual model of a machine (CPU, memory and emulated devices) to run a guest OS. It supports a number of hypervisors.

https://www.qemu.org/docs/master/system/introduction.html

So QEMU offers both hardware emulation and virtualization. It can emulate serial ports, network devices, storage, PCI, USB, and more.

As a virtualization tool, it can also virtualize the CPU and memory, but without hardware-assisted virtualization this comes at a large performance cost.

QEMU can also translate between instruction sets to run code built for a different CPU architecture, e.g. Arm on x86-64.

KVM is a kernel module, built into the mainline Linux kernel, that lets you use the hardware-assisted virtualization features of the CPU.
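Whether KVM is available on a Linux host can be checked from user space. When KVM is built as modules, the generic `kvm` module and a vendor module (`kvm_intel` or `kvm_amd`) are listed in `/proc/modules`, and the `/dev/kvm` device node is the interface user-space programs open. A sketch with hypothetical function names; note that if KVM is compiled directly into the kernel it does not appear in `/proc/modules`, so `/dev/kvm` is the more reliable signal.

```python
import os
from typing import Set

KVM_MODULES = {"kvm", "kvm_intel", "kvm_amd"}

def loaded_kvm_modules(proc_modules_text: str) -> Set[str]:
    """Return the KVM-related module names found in /proc/modules text."""
    # Each line starts with the module name, e.g. "kvm_intel 409600 0 - Live 0x0".
    names = {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}
    return names & KVM_MODULES

def kvm_device_present() -> bool:
    """True if the /dev/kvm device node exists on this machine."""
    return os.path.exists("/dev/kvm")
```

On a live system, `loaded_kvm_modules(open("/proc/modules").read())` would typically return `{"kvm", "kvm_intel"}` on an Intel host with KVM loaded as modules.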

KVM turns Linux into a type 1 hypervisor and lets guest code run directly on the hardware.

Why QEMU and KVM?

QEMU and KVM can work together. QEMU's task is to emulate the hardware:

- Storage

- Displays

- Network

- USB/PCI/Serial ports

- etc.

When QEMU starts a machine with KVM, it spawns a thread for each virtual CPU. Each thread asks KVM to run the guest, and KVM switches the CPU into non-root mode. Guest code, including the guest kernel, then executes directly on the CPU, while the remaining hardware is emulated by QEMU: when the guest touches an emulated device, the CPU exits back to KVM, which hands the request over to QEMU.
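This division of labour can be sketched as the loop each vCPU thread runs. In the real KVM API, the thread issues the `KVM_RUN` ioctl and, when it returns, inspects an exit reason such as `KVM_EXIT_IO` or `KVM_EXIT_HLT`. The Python below only simulates that control flow: `Exit`, `FakeVcpu`, `vcpu_loop` and `SERIAL_PORT` are hypothetical stand-ins, not QEMU or KVM code (0x3F8 is chosen because it is the conventional COM1 serial port on PCs).

```python
from dataclasses import dataclass
from typing import Iterator, List

@dataclass
class Exit:
    """A simulated VM exit: why the guest stopped running in non-root mode."""
    reason: str  # "io" or "hlt", loosely modelled on KVM_EXIT_IO / KVM_EXIT_HLT
    port: int = 0
    value: int = 0

class FakeVcpu:
    """Stands in for KVM: 'runs' the guest until its next exit."""
    def __init__(self, exits: List[Exit]) -> None:
        self._exits: Iterator[Exit] = iter(exits)

    def run(self) -> Exit:
        # On real hardware, guest instructions execute directly on the CPU here;
        # control only comes back to user space on a VM exit.
        return next(self._exits)

SERIAL_PORT = 0x3F8  # hypothetical emulated serial device

def vcpu_loop(vcpu: FakeVcpu) -> str:
    """User-space loop: re-enter the guest until it halts, emulating device I/O."""
    serial_output = []
    while True:
        exit_ = vcpu.run()
        if exit_.reason == "io" and exit_.port == SERIAL_PORT:
            # Device emulation happens in user space (QEMU's role).
            serial_output.append(chr(exit_.value))
        elif exit_.reason == "hlt":
            return "".join(serial_output)
        else:
            raise RuntimeError(f"unhandled exit: {exit_}")
```

For example, a guest that writes `h` and `i` to the serial port and then halts produces `"hi"`: `vcpu_loop(FakeVcpu([Exit("io", SERIAL_PORT, ord("h")), Exit("io", SERIAL_PORT, ord("i")), Exit("hlt")]))`.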

QEMU without KVM

When QEMU is used standalone (without KVM), guest code is first recompiled by QEMU into host instructions, and only then executed.

QEMU uses just-in-time (JIT) compilation (its Tiny Code Generator, TCG): translation is a continuous process that happens at runtime, as execution reaches new code.
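The idea behind this kind of JIT translation can be shown with a toy: each guest code block is translated into host-executable form the first time it is reached, cached, and reused on later visits. The tiny instruction set and `GUEST_CODE` program below are invented for illustration, and "host code" here is compiled Python; QEMU's TCG translates real machine code into host machine code.

```python
from typing import Callable, Dict, List, Optional, Tuple

Instr = Tuple[object, ...]
Regs = Dict[str, int]
Block = Callable[[Regs], Optional[int]]

# Hypothetical guest program: block address -> instructions.
# It sums 5 + 4 + 3 + 2 + 1 into register "acc".
GUEST_CODE: Dict[int, List[Instr]] = {
    0: [("mov", "n", 5), ("mov", "acc", 0), ("jmp", 1)],
    1: [("add", "acc", "n"), ("dec", "n"), ("jnz", "n", 1, 2)],
    2: [("halt",)],
}

def translate(instrs: List[Instr]) -> Block:
    """Translate one guest block into host code (here: compiled Python)."""
    src = ["def block(r):"]
    for ins in instrs:
        op = ins[0]
        if op == "mov":
            src.append(f"    r[{ins[1]!r}] = {ins[2]!r}")
        elif op == "add":
            src.append(f"    r[{ins[1]!r}] += r[{ins[2]!r}]")
        elif op == "dec":
            src.append(f"    r[{ins[1]!r}] -= 1")
        elif op == "jmp":
            src.append(f"    return {ins[1]!r}")
        elif op == "jnz":  # go to ins[2] if the register is non-zero, else ins[3]
            src.append(f"    return {ins[2]!r} if r[{ins[1]!r}] != 0 else {ins[3]!r}")
        elif op == "halt":
            src.append("    return None")
        else:
            raise ValueError(f"unknown instruction: {ins}")
    namespace: Dict[str, object] = {}
    exec(compile("\n".join(src), "<translated>", "exec"), namespace)
    return namespace["block"]  # type: ignore[return-value]

def run_guest(code: Dict[int, List[Instr]]) -> Tuple[Regs, int, int]:
    """Run guest code, translating each block only on its first visit."""
    cache: Dict[int, Block] = {}  # translation cache: guest address -> host code
    regs: Regs = {}
    pc: Optional[int] = 0
    executed = 0
    while pc is not None:
        if pc not in cache:
            cache[pc] = translate(code[pc])  # translate lazily, at runtime
        pc = cache[pc](regs)
        executed += 1
    return regs, len(cache), executed
```

`run_guest(GUEST_CODE)` ends with `acc == 15` after translating only 3 blocks but executing 7: the loop body is translated once and then reused, which is what keeps this approach tolerable without hardware assistance.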

KVM without QEMU

QEMU can be left out, and virtual machines can run directly on KVM. In this case KVM handles CPU and memory virtualization, but does not emulate other hardware such as storage or network devices.
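Using KVM without QEMU means talking to its ioctl interface on `/dev/kvm` directly. The sketch below performs only the first two steps: `KVM_GET_API_VERSION` (the KVM documentation says it should return 12) and `KVM_CREATE_VM`, which returns a file descriptor for a new, empty VM. The request numbers are the values of `_IO(0xAE, 0x00)` and `_IO(0xAE, 0x01)` from `<linux/kvm.h>`, hard-coded here as an assumption because Python has no binding for that header. The function name is hypothetical; it needs a Linux host with KVM and read/write access to `/dev/kvm`, and returns `None` otherwise.

```python
import fcntl
import os
from typing import Optional, Tuple

KVM_GET_API_VERSION = 0xAE00  # _IO(KVMIO, 0x00) with KVMIO = 0xAE
KVM_CREATE_VM = 0xAE01        # _IO(KVMIO, 0x01)

def kvm_probe() -> Optional[Tuple[int, bool]]:
    """Return (API version, whether a VM fd could be created), or None without KVM."""
    try:
        kvm_fd = os.open("/dev/kvm", os.O_RDWR | os.O_CLOEXEC)
    except OSError:
        return None  # no KVM device, or no permission to use it
    try:
        version = fcntl.ioctl(kvm_fd, KVM_GET_API_VERSION)
        try:
            vm_fd = fcntl.ioctl(kvm_fd, KVM_CREATE_VM, 0)  # 0 = default machine type
        except OSError:
            return version, False
        # A real VMM would now add guest memory and vCPUs through vm_fd.
        os.close(vm_fd)
        return version, True
    finally:
        os.close(kvm_fd)
```

Everything beyond this point (guest memory, vCPU registers, handling exits) is what a program like QEMU normally takes care of.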

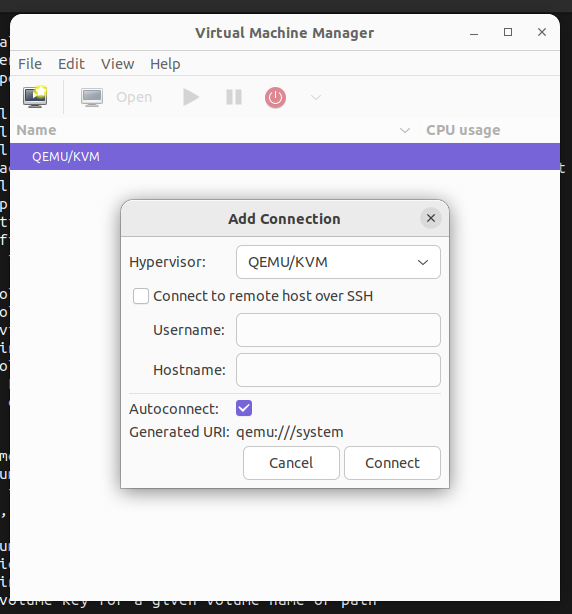

Managers

Now libvirt comes into the picture. Libvirt is an open-source API, daemon, and management tool for handling virtualization technologies.

For example, virsh is a command-line management tool for virtualization on Linux, built on top of libvirt.

```
$ virsh --help

    virsh [options]... [<command_string>]
    virsh [options]... <command> [args...]

  options:
    -c | --connect=URI      hypervisor connection URI
    -d | --debug=NUM        debug level [0-4]
    -e | --escape <char>    set escape sequence for console
    -h | --help             this help
    -k | --keepalive-interval=NUM
                            keepalive interval in seconds, 0 for disable
    -K | --keepalive-count=NUM
                            number of possible missed keepalive messages
    -l | --log=FILE         output logging to file
    -q | --quiet            quiet mode
    -r | --readonly         connect readonly
    -t | --timing           print timing information
    -v                      short version
    -V                      long version
         --version[=TYPE]   version, TYPE is short or long (default short)
  commands (non interactive mode):
...
```

On the GUI side, virt-manager is the tool for managing virtualization through libvirt.

In this setup, virt-manager is already connected to the running hypervisor (the QEMU/KVM combination discussed above).

Both tools can connect to different hypervisors, but in this case they connect to QEMU/KVM.
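The same information is available programmatically. Below is a sketch using the libvirt Python bindings (the `libvirt-python` package, assumed to be installed), connecting to `qemu:///system`, the usual URI for the system-wide QEMU/KVM hypervisor that `virsh -c` and virt-manager also accept. `libvirt.open`, `listAllDomains`, `name`, `isActive` and `close` come from the bindings; the helper names `list_domains` and `main` are hypothetical.

```python
from typing import Any, List, Tuple

def list_domains(conn: Any) -> List[Tuple[str, bool]]:
    """Return (name, running?) for every domain (VM) known to a libvirt connection."""
    # listAllDomains() returns both running and defined-but-stopped domains.
    return [(dom.name(), bool(dom.isActive())) for dom in conn.listAllDomains()]

def main() -> None:
    try:
        import libvirt  # provided by the libvirt-python package
    except ImportError:
        print("libvirt-python is not installed")
        return
    try:
        conn = libvirt.open("qemu:///system")
    except libvirt.libvirtError as err:
        print(f"cannot connect to the hypervisor: {err}")
        return
    try:
        for name, running in list_domains(conn):
            print(f"{name}: {'running' if running else 'shut off'}")
    finally:
        conn.close()

if __name__ == "__main__":
    main()
```

Keeping `list_domains` separate from the connection setup means it works with any object exposing `listAllDomains()`, which makes it easy to test without a running hypervisor.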

Conclusion

This article distinguished the different parts of virtualization on Linux. It lays the foundation for the next part, which covers containers. Since containerization uses similar concepts, it will help us understand how containers work.

Next part: